Unlocking the Power of Programmable Data Planes in Kubernetes with eBPF

- Lakmal Warusawithana

- Senior Director - Cloud Architecture - WSO2

Programmable data planes offer dynamic control for cloud native applications. Technologies like eBPF and Kubernetes provide an abstraction to improve flexibility, scalability, and performance. Read this article to see how you can use programmable data planes with eBPF, or watch the introductory video for an overview of the concepts.

As demand grows for cloud native applications in today’s fast-paced digital world, traditional data planes must evolve into programmable ones to meet changing needs. Traditional data planes often rely on proprietary hardware, which limits flexibility, scalability, and innovation. This hinders their ability to keep up with the evolving demands of cloud native applications, leading to decreased performance, reliability, and security. In contrast, programmable data planes offer dynamic control and manipulation of network behavior, making them ideal for cloud native applications. They can be used to build cost-effective and scalable network infrastructure using commodity hardware and provide the same level of performance, reliability, and security as more expensive, proprietary hardware solutions.

Lower-level network technologies such as Software-Defined Networking (SDN) [1], Network Function Virtualization (NFV) [2], and extended Berkeley Packet Filter (eBPF) [3] can all play a role in creating programmable data planes. eBPF in particular has gained significant attention in recent years for its ability to provide low-overhead, high-performance network processing in the Linux kernel.

Kubernetes is an open source container orchestration system that automates the deployment, scaling, and management of containerized applications. It provides a platform for deploying microservices-based applications in a cloud native manner, where each service can be independently deployed, scaled, and updated without impacting other services. On the other hand, a service mesh is a layer of infrastructure that provides fine-grained control over how microservices communicate with each other. It adds features such as traffic routing, load balancing, service discovery, and observability to microservices-based applications. A service mesh typically works by deploying a sidecar proxy alongside each microservice, which intercepts and routes network traffic.

Kubernetes and service mesh platforms can provide a higher level of abstraction for creating programmable data planes. Both of these platforms abstract network communication between microservices and offer APIs and libraries for managing network communication via different network policies. This abstraction simplifies the implementation of programmable data planes and leverages programmable network technologies such as eBPF to offer precise control over network behavior. This article focuses on the role of eBPF in creating effective programmable data planes in combination with the Kubernetes platform. However, it’s important to note that not all service meshes utilize lower level technologies such as eBPF.

eBPF

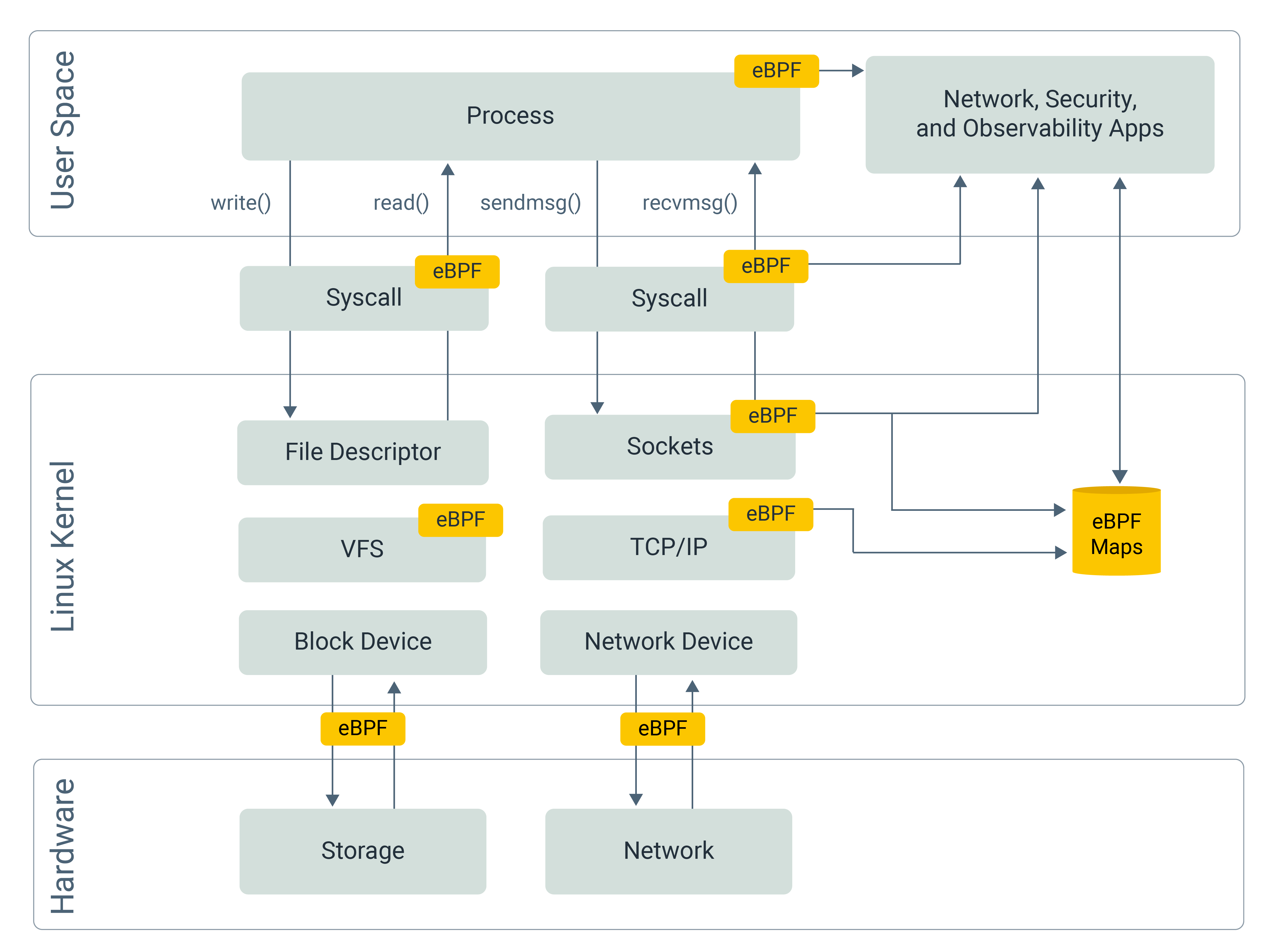

eBPF is a Linux kernel technology that provides a way for user-space programs to interact with the kernel safely and efficiently. User-space programs are software programs that run outside of the operating system's kernel, in a separate part of memory known as the user space. These programs have fewer privileges and are isolated from the operating system's critical functions, which are reserved for the kernel space. eBPF allows small in-kernel programs, called eBPF programs (the eBPF boxes in Figure 1), to be attached to various hooks in the kernel and used to analyze and manipulate network packets, system calls, and other events.

Figure 1: eBPF Overview Diagram

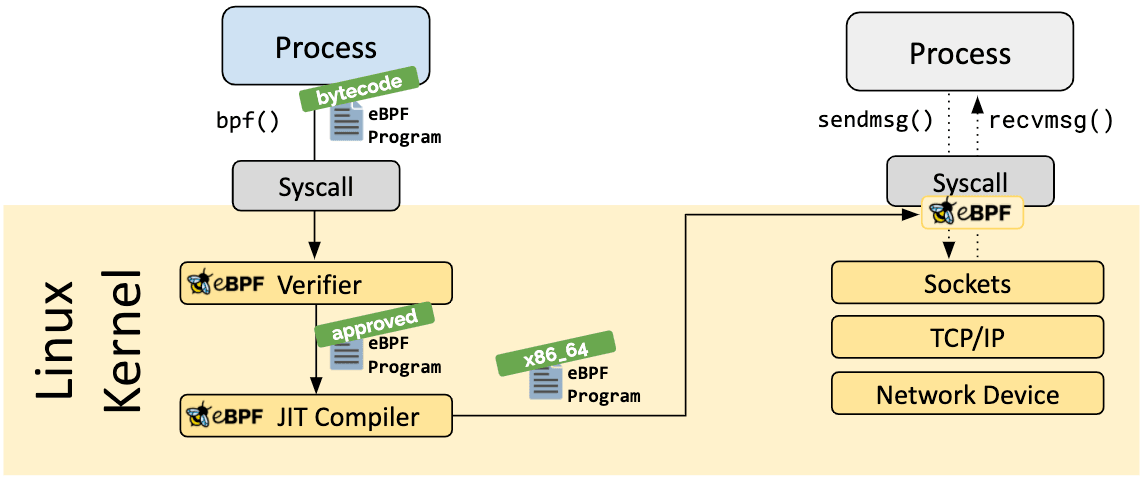

eBPF provides a high degree of programmability while ensuring that loaded programs are safe and do not cause the kernel to crash. eBPF programs execute in a restricted environment within the kernel, often described as an eBPF sandbox. The sandbox ensures that a program cannot access or modify kernel data structures directly and can only interact with them through well-defined, safer helper APIs. Before a program is attached, the in-kernel verifier statically analyzes its bytecode for safety, for example checking that the program always terminates and never accesses memory out of bounds. If any issue is detected, the program is rejected and is not loaded into the kernel. Only after verification succeeds does the just-in-time (JIT) compiler translate the eBPF bytecode into native machine code for efficient execution.

Figure 2: eBPF Program Verification - source [3]
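The verify-then-load flow can be illustrated with a deliberately tiny model. The sketch below is not the kernel verifier: the instruction format, the `MAX_INSNS` limit, and the `verify` and `load` functions are all hypothetical, and the only checks are program size, a terminating `exit`, and forward-only, in-range jumps. The real verifier tracks register and memory state as well, and newer kernels accept bounded loops.

```python
from typing import List, Tuple

# Toy instruction: (opcode, operand). For "jmp" the operand is a relative offset.
Instr = Tuple[str, int]
MAX_INSNS = 4096  # illustrative limit chosen for this sketch


def verify(program: List[Instr]) -> Tuple[bool, str]:
    """Statically check a toy program and return (accepted, reason)."""
    if not program:
        return False, "empty program"
    if len(program) > MAX_INSNS:
        return False, "program too large"
    if program[-1][0] != "exit":
        return False, "last instruction must be exit"
    for pc, (op, arg) in enumerate(program):
        if op != "jmp":
            continue
        if arg < 0:
            # A backward jump could form a loop that never terminates.
            return False, f"backward jump at {pc}"
        if pc + 1 + arg >= len(program):
            return False, f"jump out of range at {pc}"
    return True, "ok"


def load(program: List[Instr]) -> List[Instr]:
    """Refuse to load anything that fails verification."""
    accepted, reason = verify(program)
    if not accepted:
        raise ValueError(f"rejected by verifier: {reason}")
    # Stand-in for JIT compilation and attachment to a kernel hook.
    return list(program)


good = [("mov", 1), ("jmp", 0), ("exit", 0)]
looping = [("mov", 1), ("jmp", -2), ("exit", 0)]
```

Calling `load(good)` returns the program, while `load(looping)` raises `ValueError`, mirroring how a rejected eBPF program never reaches the kernel hook.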

This level of safety is particularly important in production environments, where stability and reliability are critical. eBPF's low overhead makes it well-suited for use in production container environments where performance is important.

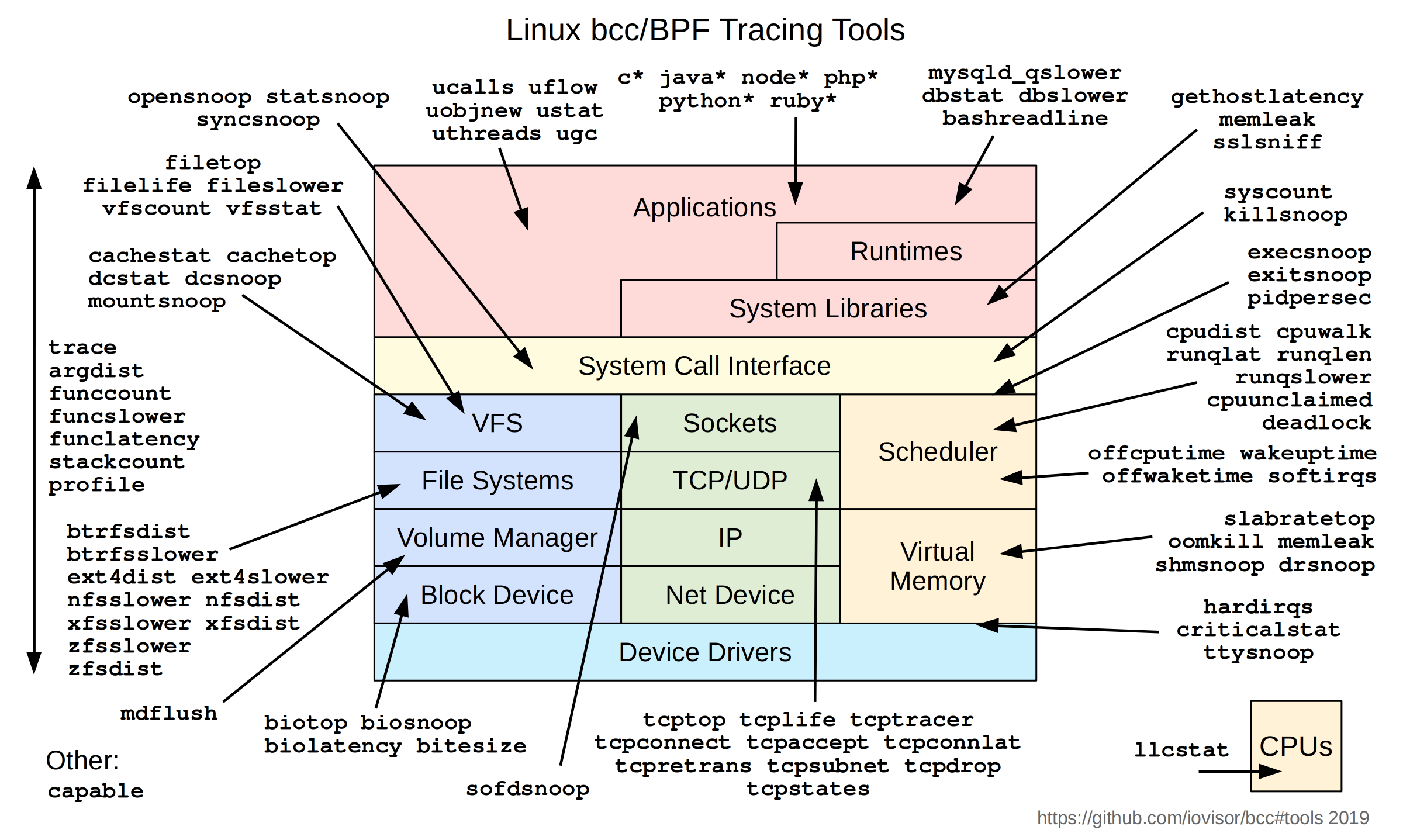

There are many different tools available for writing eBPF programs, such as BCC, bpftrace, and libbpf (described below), and each comes with its own strengths and weaknesses. The choice of tool depends on the specific use case and the programming language you’re most comfortable with.

BPF Compiler Collection (BCC) [4] provides a set of tools and libraries for creating eBPF programs in C and Python. BCC comes with a set of pre-built tools that can be used to monitor and debug various aspects of the system. To write custom eBPF programs using BCC, you can use the BPF C library or Python bindings. Figure 3 illustrates a set of tools provided by BCC, which is built on top of eBPF and allows developers to write and run custom eBPF programs.

Figure 3: Linux BCC/BPF tracing tools - source [4]
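As a rough illustration of the BCC workflow, the sketch below embeds a small eBPF program written in C inside a Python script and attaches it to the `clone` system call. It assumes the `bcc` Python package, matching kernel headers, and root privileges; the function names `hello` and `run` are placeholders chosen for this example. The import is done inside `run()` so that the file can be read and loaded on a machine without BCC installed.

```python
# eBPF program in C: emits a trace line every time the probe fires.
BPF_PROGRAM = r"""
int hello(void *ctx) {
    bpf_trace_printk("clone() called\n");
    return 0;
}
"""


def run() -> None:
    """Compile the program, attach it to clone(), and stream its output."""
    from bcc import BPF  # requires BCC to be installed; run as root

    b = BPF(text=BPF_PROGRAM)
    # Resolve the kernel symbol for the clone syscall on this kernel version.
    b.attach_kprobe(event=b.get_syscall_fnname("clone"), fn_name="hello")
    # Blocks and prints lines from the kernel trace pipe until interrupted.
    b.trace_print()
```

Calling `run()` on a suitably configured Linux host should print one line per new process or thread until the script is interrupted.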

Bpftrace [5] is a high-level tracing language for eBPF that allows you to write complex tracing programs with ease. It provides a simple syntax for creating eBPF programs without the need to write low-level C or assembly code. Bpftrace can be used to trace system calls, kernel functions, and other events.

Libbpf [6] is a low-level library for creating eBPF programs in C. It provides a set of helper functions that simplify the process of loading and manipulating eBPF programs. Libbpf can be used to create eBPF programs for a variety of use cases, including tracing, monitoring, and security.

eBPF was originally used for packet filtering in kernel space. More recently, it has also been extended to run in user space, which opens up possibilities for using eBPF in a wider range of use cases.

Cloud Native Network Functions

Traditional data planes typically rely on hardware-based network functions (e.g., routers and switches) to provide network services like routing, firewalling, and load balancing. These functions are often inflexible and require specialized hardware to perform. In contrast, programmable data planes use software-based network functions that can be programmed to perform a wide range of tasks. They can run on commodity hardware, making it easier to deploy network functions in virtualized or cloud environments. eBPF can go beyond a general implementation of network function virtualization and optimize network operations in line with cloud native architecture, as the following sections describe.

Service Discovery and Load Balancing

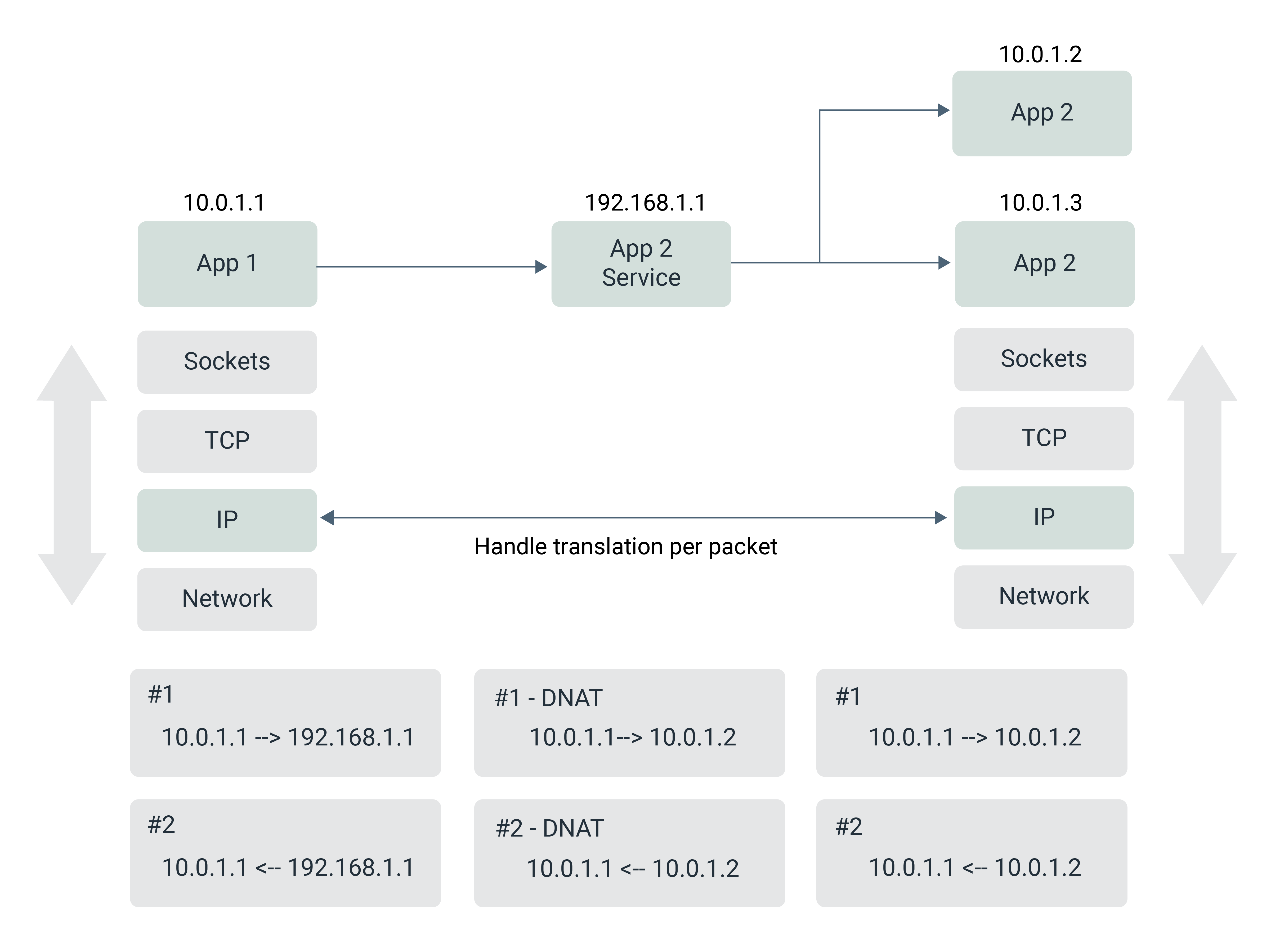

In Kubernetes, a service is an abstraction layer that provides a stable IP address for a set of pods. When a Kubernetes service is created, it is assigned a virtual IP address (also known as a ClusterIP) and a port number. This IP address is virtual in the sense that it does not correspond to a physical network interface on any node in the cluster. Instead, it’s used as a stable endpoint for the service, even as individual pods come and go.

When a client sends traffic to the service's IP address and port, the traffic is redirected according to rules programmed by kube-proxy, a network proxy that runs on every node in the cluster. kube-proxy maintains a set of iptables (or IPVS) rules on each node that redirect traffic from the service's virtual IP address to one of the pods associated with the service.

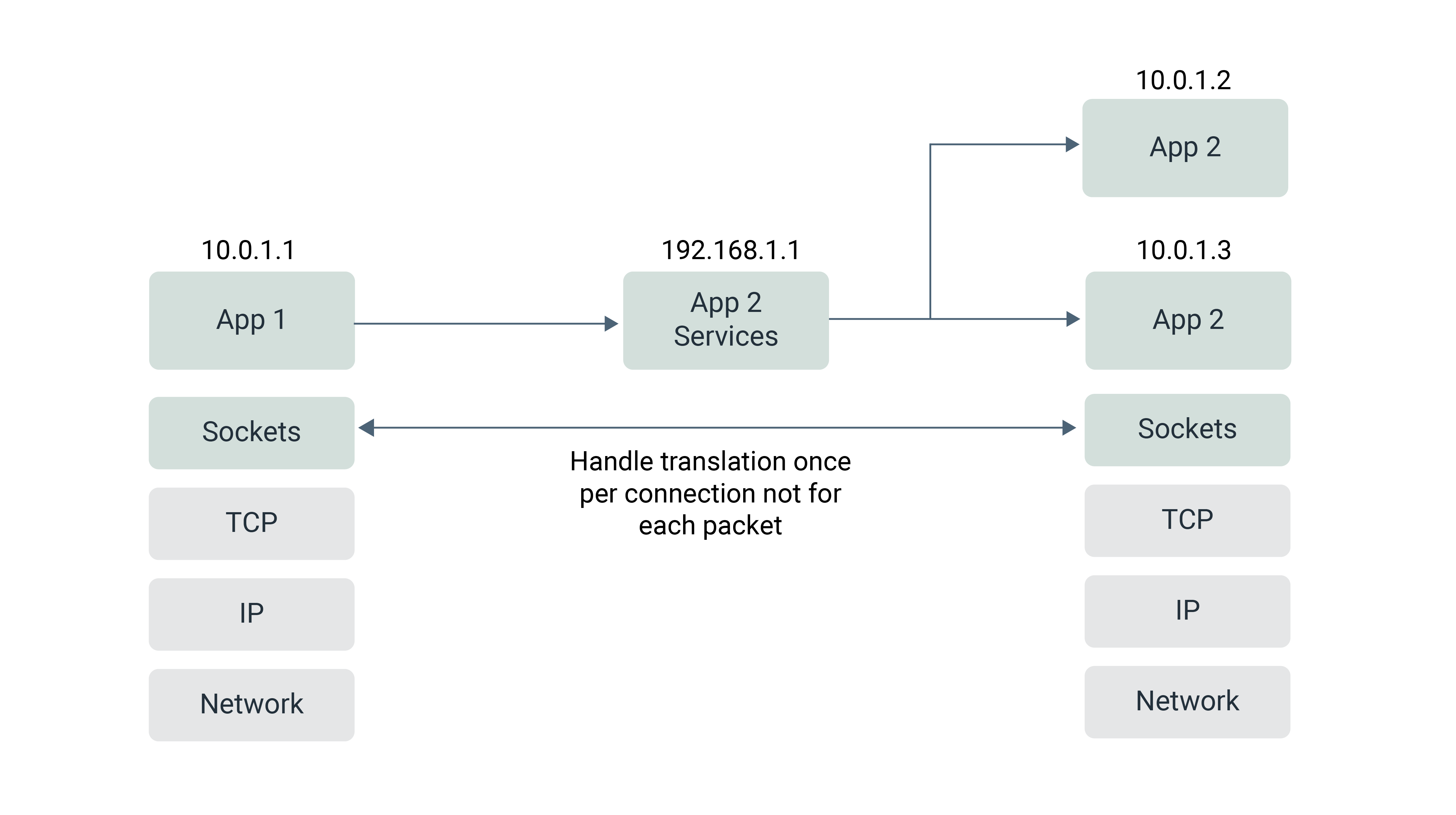

Figure 4: Network-based load balancing

In iptables mode, kube-proxy programs the rules for all the services and endpoints it manages into the node's iptables rule set, which the kernel evaluates sequentially and which must be updated as services and endpoints change. This can become a performance bottleneck and lead to scalability issues in large-scale clusters. To address the performance and scalability issues of the iptables-based kube-proxy, Cilium [7] (an eBPF-based networking and security solution for Kubernetes) provides an alternative, socket-based load balancing mode of operation. In socket-based load balancing mode [8], Cilium attaches eBPF programs to socket-level hooks such as connect(). When an application connects to a service's virtual IP address, the eBPF program looks up the service, selects an appropriate service endpoint, and rewrites the destination to that endpoint, so the translation happens once per connection rather than for every packet.

Figure 5: Socket-based load balancing
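One way to picture the difference between the two approaches is as a lookup problem. The sketch below is a simplified model, not kube-proxy or Cilium code: `chain_lookup` stands in for sequential rule matching, and the hypothetical `SocketLB` class stands in for a connect-time translation backed by a hash map (playing the role of an eBPF map). The service and pod addresses are made up for the example.

```python
import random
from typing import Dict, List, Optional, Tuple

Service = Tuple[str, int]  # (virtual IP, port)
Backend = Tuple[str, int]  # (pod IP, port)


def chain_lookup(rules: List[Tuple[Service, List[Backend]]],
                 dst: Service) -> Tuple[Optional[Backend], int]:
    """Sequential matching: returns (backend, number of rules scanned)."""
    for scanned, (svc, backends) in enumerate(rules, start=1):
        if svc == dst:
            return random.choice(backends), scanned
    return None, len(rules)


class SocketLB:
    """Connect-time translation using a hash map keyed by service address."""

    def __init__(self, services: Dict[Service, List[Backend]]) -> None:
        self.services = services

    def on_connect(self, dst: Service) -> Tuple[str, int]:
        backends = self.services.get(dst)  # constant-time lookup on average
        if not backends:
            return dst  # not a service address: leave the destination as is
        return random.choice(backends)


# 1,000 hypothetical services, each with two pod backends.
rules = [
    ((f"10.96.{i // 256}.{i % 256}", 80),
     [(f"10.0.{i // 256}.{i % 256}", 8080), (f"10.1.{i // 256}.{i % 256}", 8080)])
    for i in range(1000)
]
lb = SocketLB(dict(rules))
target = rules[-1][0]
backend, scanned = chain_lookup(rules, target)
```

In this model the sequential lookup for the last service scans all 1,000 rules, while `lb.on_connect(target)` resolves the backend with a single map lookup at connection setup.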

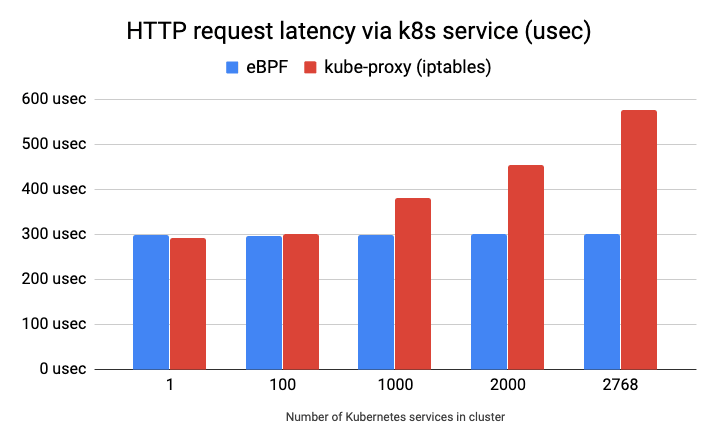

eBPF socket-based load balancing performs better than the iptables-based kube-proxy. The use of eBPF allows for more efficient and fine-grained packet processing in the kernel, which can reduce latency and increase throughput. Figure 6 shows some performance comparisons between the iptables-based kube-proxy and eBPF socket-based load balancing.

Figure 6: Performance comparison of kube-proxy (iptables) and the eBPF socket-based load balancer - source [8]

Identity-Based Network Security

Network security is important because it helps protect the communication and data flowing over a network from unauthorized access, interception, and modification. IP-based network security relies on controlling access based on IP addresses, which are assigned to devices on the network. For example, a firewall might allow or block access to a particular IP address or range of addresses.

However, IP-based security has limitations, as IP addresses can be easily spoofed or manipulated, making it difficult to determine the true identity of the user or application. This is especially problematic in Kubernetes environments, where applications are highly dynamic and ephemeral, with IP addresses that are constantly changing.

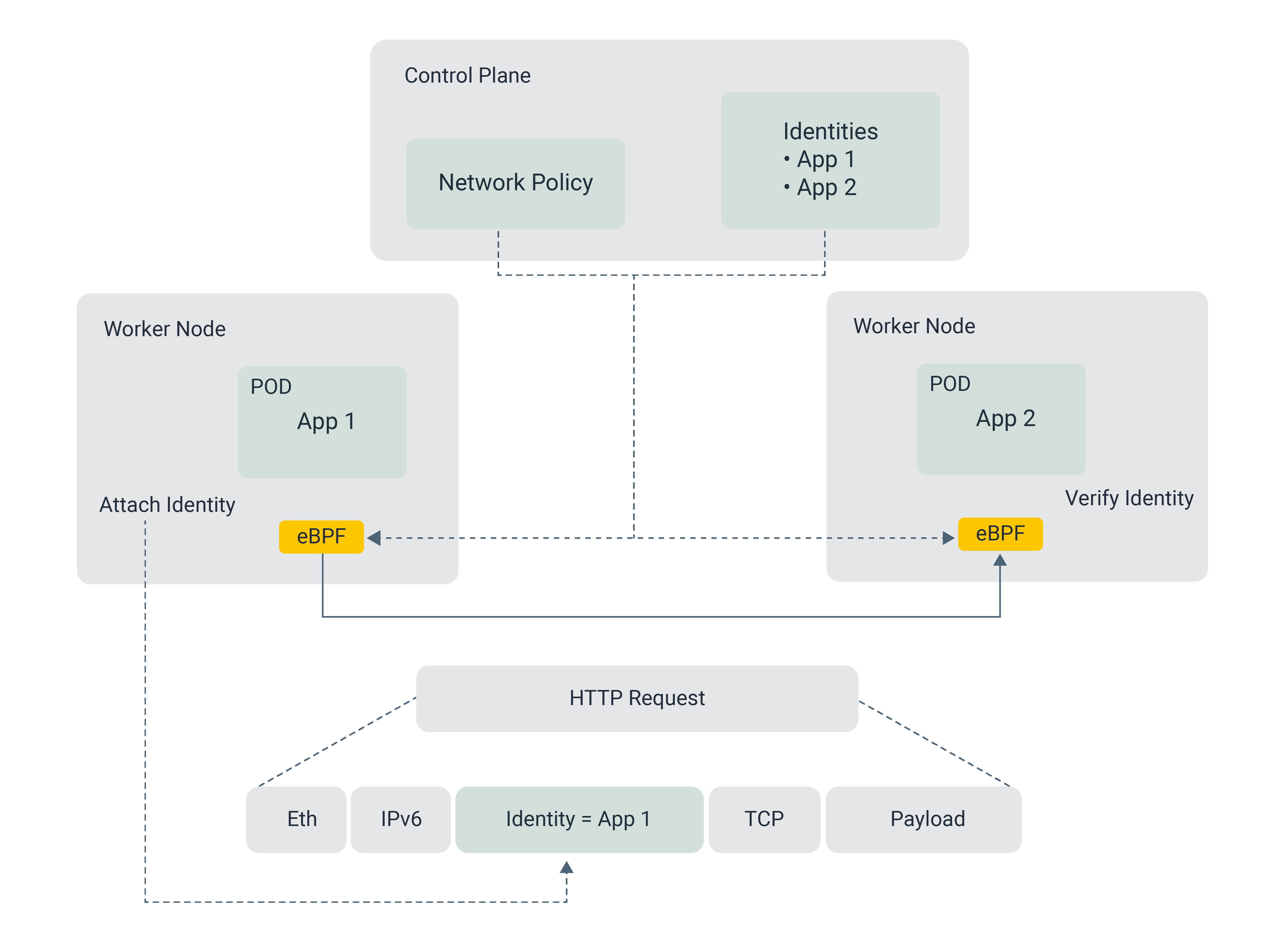

Figure 7: eBPF Identity-based security

eBPF identity-based policies can control egress calls from a pod by leveraging the rich set of metadata available in the eBPF maps. The metadata includes information about the pod and container, such as labels and annotations, and can be used to enforce security policies based on identity. This metadata can be used to match against policies that define who is allowed to access which services, and the policies can be enforced using eBPF programs loaded into the kernel.

For example, when a packet is sent from a pod, an eBPF program can extract the pod metadata from the packet, check if the pod is allowed to access the service, and then either permit or drop the packet accordingly. This provides a highly granular way to control access to services based on identity, rather than relying on IP addresses.

In addition to controlling egress traffic, eBPF can also enforce policies for ingress traffic at the destination pod. When a packet is received by a pod, an eBPF program can extract metadata from the packet and use it to enforce security policies. For example, the program can check if the packet is coming from an authorized source and is not carrying any malicious payload, and then either allow or block the packet accordingly.
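The idea of deciding on identity rather than IP address can be sketched as follows. This is a minimal model under stated assumptions: the `IdentityAllocator` and `PolicyEnforcer` classes, the labels, and the IP addresses are all hypothetical, and plain Python dictionaries and sets stand in for the eBPF maps that a real datapath would consult.

```python
from typing import Dict, FrozenSet, Optional, Set, Tuple

Labels = FrozenSet[Tuple[str, str]]


class IdentityAllocator:
    """Assigns one numeric identity per distinct set of pod labels."""

    def __init__(self) -> None:
        self._ids: Dict[Labels, int] = {}

    def identity_for(self, labels: Dict[str, str]) -> int:
        key = frozenset(labels.items())
        if key not in self._ids:
            self._ids[key] = len(self._ids) + 1
        return self._ids[key]


class PolicyEnforcer:
    """Decides per packet using identities looked up from the pod IPs."""

    def __init__(self) -> None:
        self.ip_to_identity: Dict[str, int] = {}   # stand-in for an eBPF map
        self.allowed: Set[Tuple[int, int, int]] = set()  # (src id, dst id, port)

    def upsert_endpoint(self, ip: str, identity: int) -> None:
        self.ip_to_identity[ip] = identity

    def remove_endpoint(self, ip: str) -> None:
        self.ip_to_identity.pop(ip, None)

    def allow(self, src_id: int, dst_id: int, port: int) -> None:
        self.allowed.add((src_id, dst_id, port))

    def verdict(self, src_ip: str, dst_ip: str, port: int) -> str:
        src: Optional[int] = self.ip_to_identity.get(src_ip)
        dst: Optional[int] = self.ip_to_identity.get(dst_ip)
        if src is None or dst is None:
            return "DROP"  # unknown workloads are denied in this sketch
        return "ALLOW" if (src, dst, port) in self.allowed else "DROP"


ids = IdentityAllocator()
frontend = ids.identity_for({"app": "frontend"})
backend_id = ids.identity_for({"app": "backend"})

enforcer = PolicyEnforcer()
enforcer.allow(frontend, backend_id, 8080)
enforcer.upsert_endpoint("10.0.1.5", frontend)
enforcer.upsert_endpoint("10.0.2.9", backend_id)
before = enforcer.verdict("10.0.1.5", "10.0.2.9", 8080)

# The frontend pod is rescheduled and receives a new IP address.
enforcer.remove_endpoint("10.0.1.5")
enforcer.upsert_endpoint("10.0.3.7", frontend)
after = enforcer.verdict("10.0.3.7", "10.0.2.9", 8080)
```

The policy itself never mentions an IP address, so it keeps working after the pod is rescheduled; only the IP-to-identity mapping has to be refreshed.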

Observability

Observability is the practice of gaining insight into the behavior of a system through various types of data, such as metrics, logs, and traces. Current observability practices often rely on manual instrumentation, which involves adding code to specific areas of a system to collect and analyze data. This process can be burdensome and time-consuming and may create a barrier to entry for organizations looking to implement observability in their environments.

Service mesh with sidecar proxies is a popular approach for providing observability in microservices environments. Sidecar proxies can automatically instrument the application code and generate telemetry data, which can be collected and analyzed by the mesh control plane to provide visibility into the application's behavior. However, this approach comes with the overhead of running a separate sidecar proxy for each service instance, which can impact performance and resource utilization.

eBPF provides an alternative approach to observability in service mesh environments by allowing kernel-level instrumentation. This means that telemetry data can be collected without the need for a separate sidecar proxy, reducing overhead and resource utilization. eBPF programs can be used to capture network traffic, inspect and modify packets, and generate telemetry data for analysis by a centralized observability platform. This allows for deep visibility into application behavior and the underlying infrastructure, without the overhead of a sidecar proxy.
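A common pattern in eBPF-based telemetry is to aggregate measurements inside the kernel and let user space read only a compact summary. The sketch below models that pattern in plain Python, assuming request latencies in microseconds as input; the `Log2Histogram` class is a hypothetical stand-in for a histogram kept in an eBPF map, and the sample values are invented.

```python
from collections import defaultdict
from typing import Dict


class Log2Histogram:
    """Power-of-two latency histogram, similar in spirit to eBPF map aggregation."""

    def __init__(self) -> None:
        self.buckets: Dict[int, int] = defaultdict(int)

    def record(self, value_us: int) -> None:
        # Bucket index is floor(log2(value)); values below 1 are counted as 1.
        self.buckets[max(value_us, 1).bit_length() - 1] += 1

    def snapshot(self) -> Dict[str, int]:
        """Summary that a user-space agent would read and export."""
        return {
            f"[{1 << b}, {1 << (b + 1)})": count
            for b, count in sorted(self.buckets.items())
        }


hist = Log2Histogram()
for latency_us in (3, 5, 6, 120, 130, 9000):
    hist.record(latency_us)
summary = hist.snapshot()
```

Because only bucket counts leave the "kernel side" of this model, the cost of exporting telemetry stays small even when the number of observed events is large.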

Pixie and Hubble are tools that leverage eBPF technology to provide deeper observability in cloud native environments.

Pixie [9] is an open source observability platform that uses eBPF to automatically instrument applications and collect performance data without requiring any manual code instrumentation. It provides a real-time data processing pipeline that enables users to monitor their applications, troubleshoot performance issues, and analyze their data in real-time. Pixie also has a user-friendly interface that allows users to visualize their data in a variety of ways, making it easy to understand the health of their system.

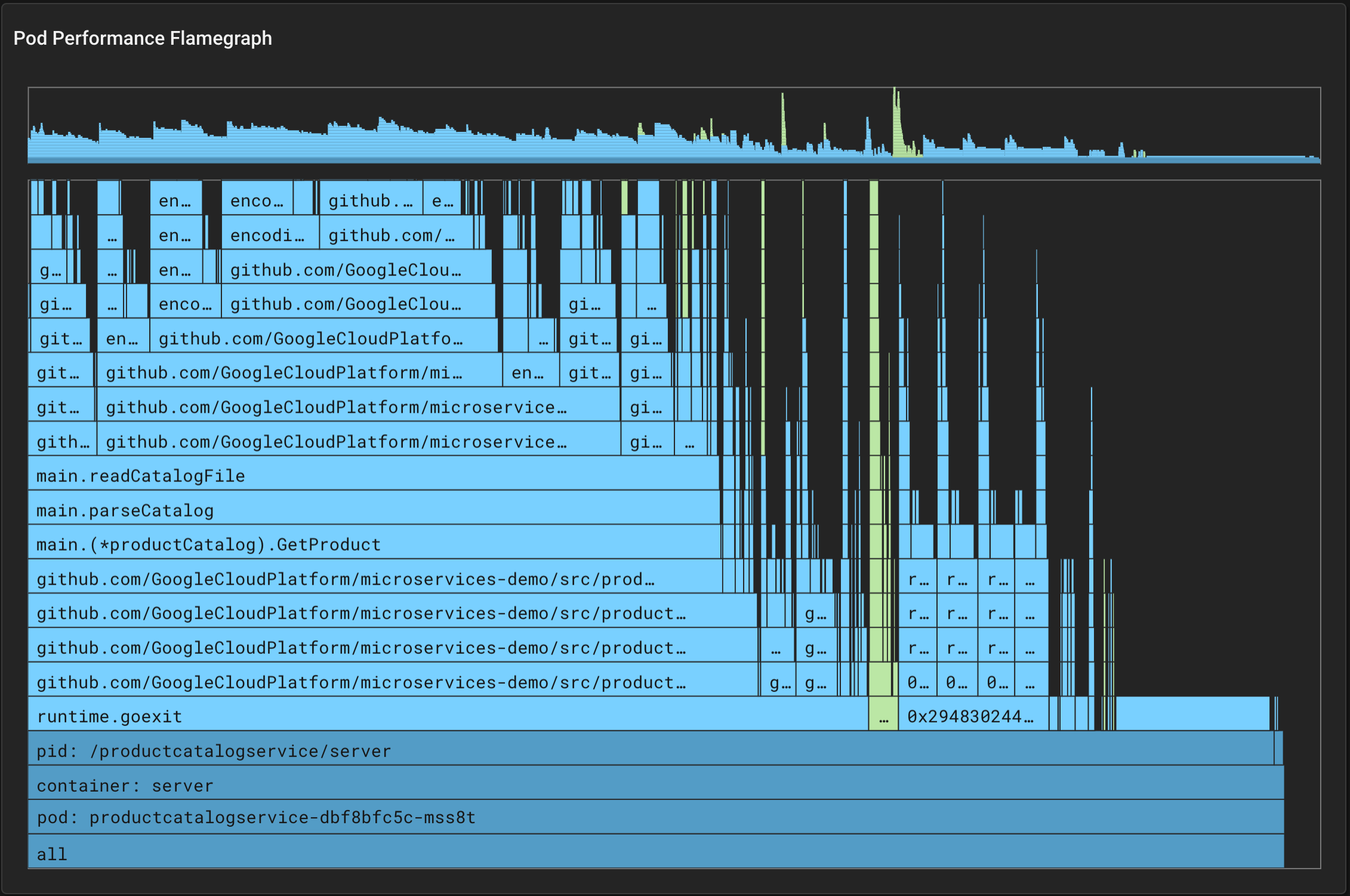

Figure 8: Pixie pod performance flamegraph - source [9]

Pixie's eBPF-based flame graph can help identify performance issues within the application code by providing a visual representation of where the time is being spent during execution. The flame graph can identify bottlenecks in the application code, such as functions or methods that are taking up a significant amount of time, and can help developers optimize their code to improve performance. Additionally, Pixie's flame graph can also identify issues related to system resources, such as CPU utilization, which can impact application performance.

Hubble [10] is an open-source network observability tool that uses eBPF to provide visibility into network traffic in Kubernetes environments. Hubble consists of a network visibility daemon that runs on each node and collects data using eBPF. The data is then exported to a central server, where it can be analyzed and visualized using the Hubble UI. Hubble enables users to quickly identify network issues and provides deep visibility into network activity, including packet-level details.

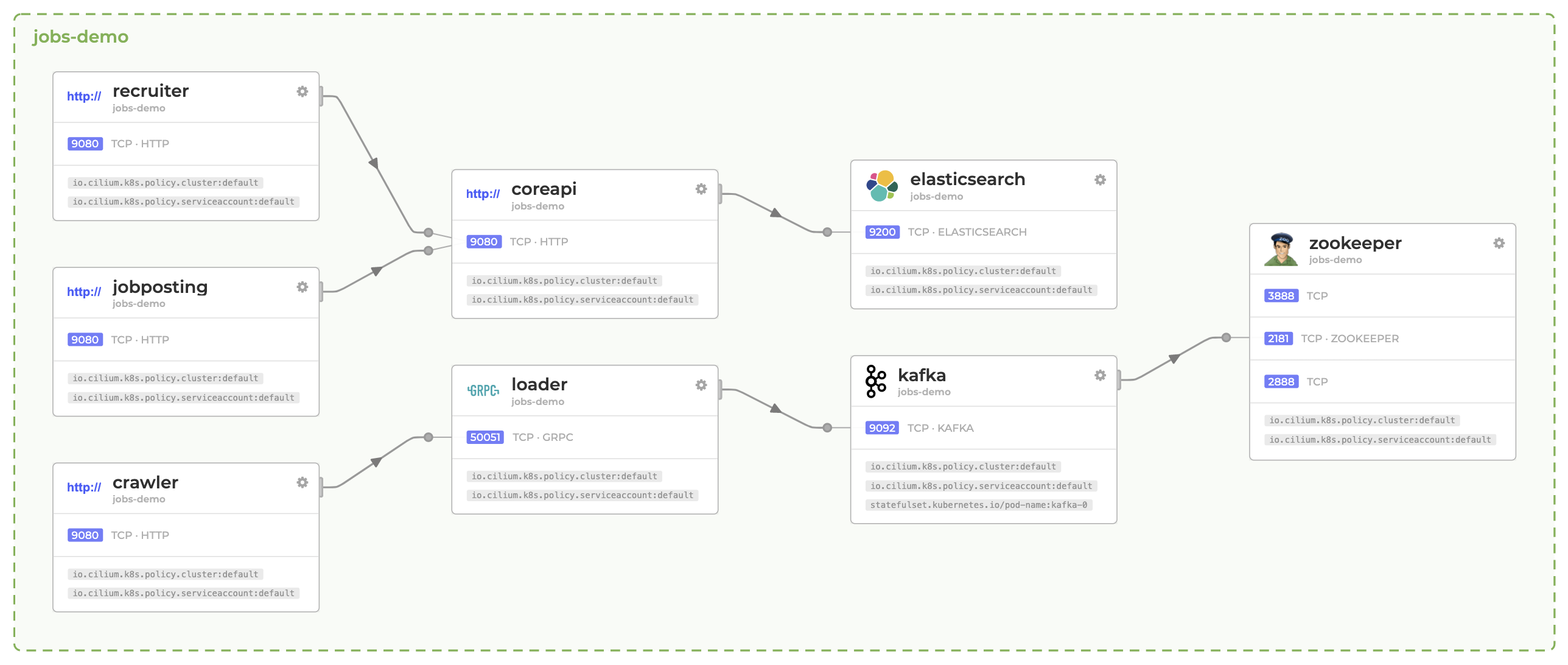

Figure 9 shows Hubble’s automatic service discovery and dependency graph, which allows operators to visualize the communication flow between services in a Kubernetes cluster without any manual configuration.

Figure 9: Hubble’s service dependency graph for Kubernetes - source [10]

Runtime Security

eBPF-based observability provides a powerful and efficient way to gather real-time insights into the behavior of cloud native applications running in production environments. By leveraging eBPF, operators can attach custom probes to the kernel to monitor and collect fine-grained telemetry data such as network traffic, system calls, and process activity. This data can then be analyzed in real-time to detect anomalies, identify security threats, and diagnose performance issues.

Falco [11] and Cilium Tetragon [12] are two popular open-source projects that leverage eBPF-based observability to provide runtime security for cloud native applications.

Falco operates in both user space and kernel space: system calls are captured using either a kernel module or an eBPF probe and are then analyzed using libraries in user space. Suspicious events are filtered using a rules engine in which the Falco rules are configured, and alerts are sent to configured outputs, such as syslog, files, standard output, and others. Falco can be used to monitor containerized environments and is widely used to detect and prevent security incidents in cloud native infrastructures.
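The capture, filter, and alert pipeline described above can be approximated with a small rules engine. The sketch below does not use Falco's rule syntax or APIs: the `Rule` class, the rule names, and the event fields are all hypothetical, and the events are hand-written dictionaries rather than system calls captured from the kernel.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

Event = Dict[str, str]


@dataclass
class Rule:
    name: str
    priority: str
    condition: Callable[[Event], bool]


RULES = [
    Rule("shell_in_container", "WARNING",
         lambda e: e.get("syscall") == "execve"
         and e.get("container") != "host"
         and e.get("proc") in ("bash", "sh")),
    Rule("write_below_etc", "ERROR",
         lambda e: e.get("syscall") == "openat"
         and e.get("path", "").startswith("/etc/")
         and e.get("mode") == "w"),
]


def evaluate(events: Iterable[Event], rules: List[Rule],
             outputs: List[Callable[[str], None]]) -> List[Tuple[str, Event]]:
    """Match every event against every rule and fan alerts out to the outputs."""
    matches: List[Tuple[str, Event]] = []
    for event in events:
        for rule in rules:
            if rule.condition(event):
                matches.append((rule.name, event))
                for emit in outputs:
                    emit(f"{rule.priority} {rule.name} proc={event.get('proc')}")
    return matches


events = [
    {"syscall": "execve", "container": "web-1", "proc": "bash"},
    {"syscall": "openat", "container": "host", "proc": "vim",
     "path": "/etc/passwd", "mode": "w"},
    {"syscall": "read", "container": "web-1", "proc": "nginx"},
]
alerts: List[str] = []
matches = evaluate(events, RULES, outputs=[alerts.append])
```

Here `alerts.append` plays the role of a configured output; swapping in `print` or a function that writes to a file would change where alerts go without touching the rules.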

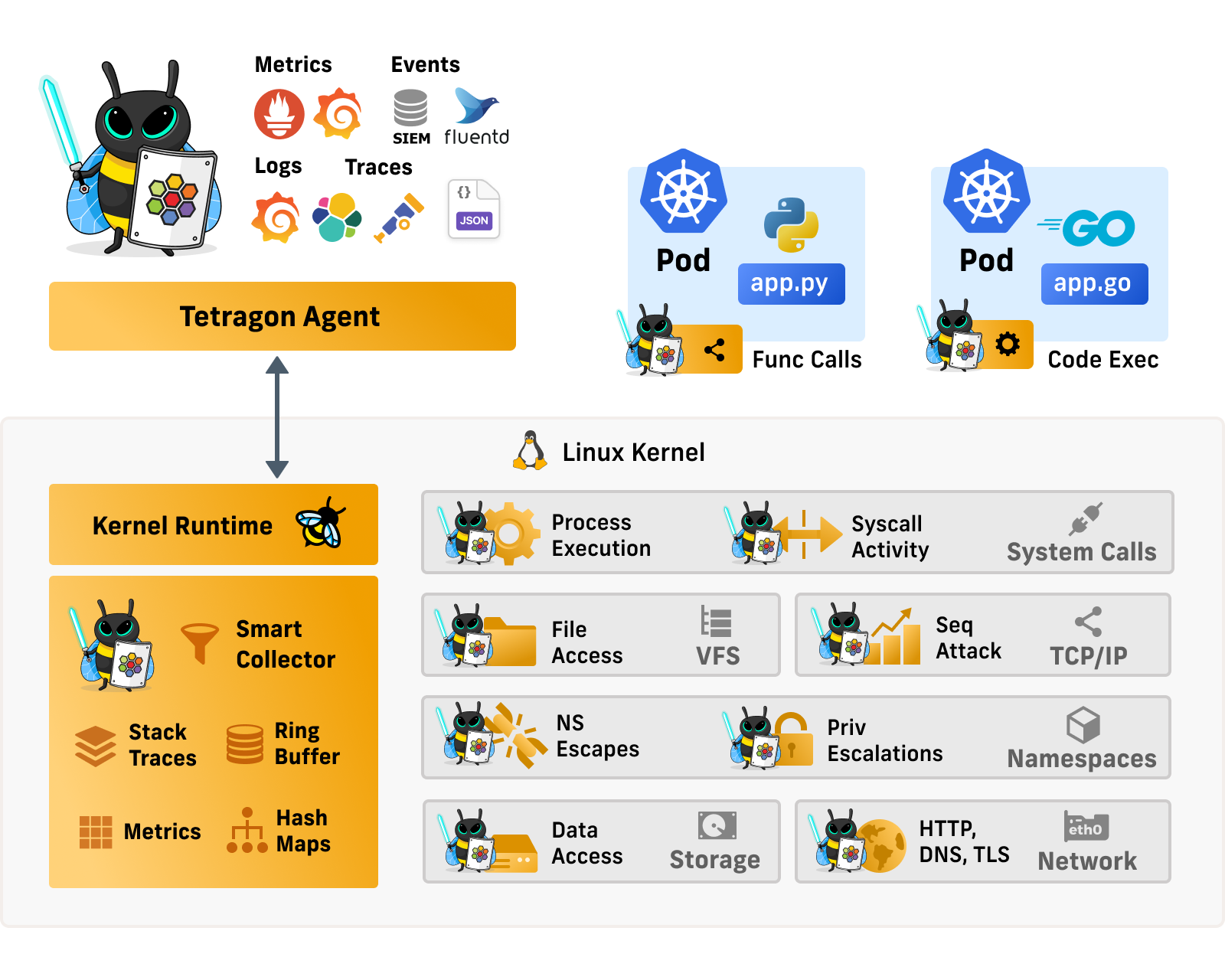

Cilium Tetragon provides a powerful observability layer that enables visibility into various system components, including low-level kernel activities such as tracking file accesses, network activity, and capability changes. It extends its coverage up to the application layer, allowing tracing of process execution, function calls into vulnerable libraries, and understanding of HTTP requests. Tetragon can also detect potential security threats such as namespace escapes, privilege escalations, file system and data access, and network activities using various protocols like HTTP, DNS, TLS, and TCP. Additionally, it provides auditing of system call invocation and process execution for enhanced security.

Figure 10: Cilium Tetragon runtime security over deep observability - source [12]

Simplifying Developer Experiences with Programmable Data Planes: The Choreo Advantage

With 18 years of experience in middleware product innovation and a commitment to empowering developers, WSO2 has always prioritized simplifying complexity and enhancing productivity for business application developers. Choreo is an internal developer platform-as-a-service that harnesses the power of programmable data planes. By abstracting complex technologies like Kubernetes and eBPF, Choreo allows developers to focus on their applications, which execute within an implicitly API-managed “cell architecture” environment. It offers two types of data planes: the Choreo Cloud Data Plane and the Choreo Private Data Plane.

The Choreo Cloud Data Plane operates on a multi-tenanted infrastructure, enabling the secure deployment of user applications in a shared environment. It harnesses the power of K8s and eBPF to efficiently handle network traffic with flexibility and security.

On the other hand, the Choreo Private Data Plane provides dedicated infrastructure exclusively allocated to organizations, ensuring privacy and control for applications with specific requirements and compliance needs.

Tap into the transformative capabilities of programmable data planes with Choreo. With Choreo’s data planes, powered by K8s and eBPF, developers can harness unprecedented power and flexibility. Choreo empowers you to unlock the true potential of your applications effortlessly and unleash innovation like never before.

Explore the capabilities of Choreo's programmable data planes.

Key Takeaways

- Programmable data planes are critical to enhance network communication for cloud native applications and offer precise control over network behavior such as traffic management, security, and observability.

- eBPF is a high-performance technology that enables code to be executed efficiently within the Linux kernel. It can be used to implement various network functions, including firewall rules, traffic analysis, and routing, to improve the performance, security, and efficiency of network communication.

- Kubernetes and service meshes are widely used to construct control planes and data planes. These platforms abstract network communication between microservices and offer APIs and libraries for managing network communication. This abstraction simplifies the implementation of programmable data planes and leverages programmable network technologies such as eBPF to offer precise control over network behavior.

- Cilium is a network plugin for Kubernetes that utilizes eBPF to replace the traditional kube-proxy component. eBPF allows Cilium to program the Linux kernel directly, without the need for iptables, and to perform more advanced load balancing and network policy enforcement functions.

- Cilium also uses eBPF to implement identity-based security: it extracts the identity of the workload, such as the Kubernetes Pod, and then uses this identity to enforce security policies. This approach enables Cilium to move beyond traditional IP-based security, which relies on IP addresses to identify workloads, and instead use higher-level constructs such as Kubernetes Pod and Service identities.

- eBPF provides deep observability in cloud native platforms by allowing users to trace and analyze various events happening at different layers of the system, including the kernel, networking, and application layers. With eBPF, users can create custom probes and tracepoints to collect detailed information on system calls, network packets, and other events, without manual instrumentation.

- eBPF-based runtime security can help operators detect and respond to security threats in real-time. By using eBPF to instrument system calls, network traffic, and other events, operators can monitor the system for suspicious behavior and take action to prevent attacks before they cause damage.

- eBPF is a crucial technology for creating programmable data planes as it enables efficient execution of code within the Linux kernel to implement network functions such as firewall rules, traffic analysis, and routing. Various open source projects, such as Cilium, Pixie, and Falco, are built on top of eBPF, offering different solutions for creating effective programmable data planes.

- Choreo is a cloud-based platform that utilizes programmable data planes to streamline application development. Offering a secure, multi-tenanted cloud data plane and a private data plane with dedicated infrastructure, Choreo emphasizes simplicity and productivity. Leveraging technologies like Kubernetes and eBPF allows developers to focus on application logic, fostering effortless innovation.

References

[1] https://opennetworking.org/sdn-definition/

[2] https://www.etsi.org/technologies/nfv

[3] https://ebpf.io/what-is-ebpf/

[4] https://github.com/iovisor/bcc

[5] https://github.com/iovisor/bpftrace

[6] https://github.com/libbpf/libbpf

[8] https://cilium.io/blog/2019/08/20/cilium-16/#kubeproxy-removal

[10] https://github.com/cilium/hubble

[11] https://falco.org/